It extracts all the URLs from a web page. Why? Because you need to start from one page (e.g. the page of each book) to scrape data from it. URL encoding converts non-ASCII characters into a format that can be transmitted over the Internet.
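Here is a minimal sketch of URL encoding using Python's standard `urllib.parse`. The path `/books/café au lait` is a made-up example and does not come from the original.

```python
from urllib.parse import quote, unquote

# Hypothetical path containing a non-ASCII character and a space.
raw_path = "/books/café au lait"

# quote() encodes the text as UTF-8, then replaces every byte that is not
# URL-safe with %XX. "/" is left alone because it is in the default safe set.
encoded = quote(raw_path)
print(encoded)  # /books/caf%C3%A9%20au%20lait

# unquote() reverses the process and gives back the original text.
decoded = unquote(encoded)
print(decoded == raw_path)  # True
```

The "é" becomes two escapes (`%C3%A9`) because it takes two bytes in UTF-8.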

- `url`: the website or page you want to open.
- `response`: the server's reply. If the request succeeds, great! Your internet connection works, your URL is correct, and you are allowed to access this page.

Needless to say, the variable names can be anything else; we care more about the code workflow. I will start by talking informally, but you can find the formal terms in the code comments.
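The `url`/`response` workflow can be sketched roughly as follows. This assumes Python's standard-library `urllib.request` in place of Requests, and `check_page` is a hypothetical helper name. It reports which of the three conditions (connection, URL, permission) failed; treating 404 as "bad URL" and 401/403 as "not allowed" is a simplifying assumption.

```python
import urllib.error
import urllib.request


def check_page(url: str, timeout: float = 10.0) -> str:
    """Try to open `url` and describe which condition failed, if any."""
    try:
        # `response` is the server's reply to our request for `url`.
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return f"ok: HTTP {response.status}"
    except urllib.error.HTTPError as err:
        # The server answered, but could not find the page or refused access.
        # HTTPError is a subclass of URLError, so it must be caught first.
        if err.code == 404:
            return "bad URL: page not found (HTTP 404)"
        if err.code in (401, 403):
            return f"not allowed: HTTP {err.code}"
        return f"HTTP error {err.code}"
    except urllib.error.URLError as err:
        # No HTTP reply at all: DNS failure, refused connection, no network, etc.
        return f"connection problem: {err.reason}"
```

When you are online, `check_page("https://example.com/")` should return a string starting with `ok:`. With Requests, the equivalent is calling `requests.get(url)` and inspecting `response.status_code`.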

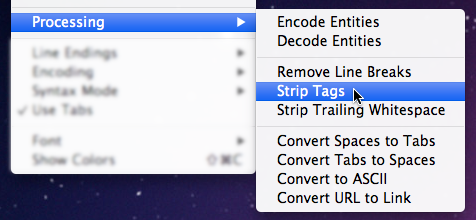

This is the closest way to actually copy a website: it lets you view the actual HTML and CSS used by the site you wish to copy before testing locally and offline. However, there are a couple of downsides to this method. Many websites, especially those that have …

libcurl error codes:

- `CURLE_UNSUPPORTED_PROTOCOL` (1): The URL you passed to libcurl used a protocol that this libcurl does not support. The support might be a compile-time option that you did not use, it can be a misspelled protocol string, or just a protocol libcurl has no code for.
- `CURLE_FAILED_INIT` (2): Early initialization code failed.
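One cause listed for an unsupported protocol is a misspelled protocol string, which you can catch before the URL ever reaches libcurl. The sketch below is a hypothetical pre-flight check in Python. `ASSUMED_SUPPORTED` is an assumption: the real protocol list depends on how your libcurl was built, and `curl --version` prints it on its `Protocols:` line.

```python
from typing import Optional
from urllib.parse import urlsplit

# ASSUMPTION: a hypothetical subset of protocols. Check `curl --version`
# ("Protocols:" line) for what your libcurl build actually supports.
ASSUMED_SUPPORTED = {"http", "https", "ftp", "ftps", "file"}


def protocol_problem(url: str) -> Optional[str]:
    """Describe a protocol problem in `url`, or return None if the scheme looks fine."""
    scheme = urlsplit(url).scheme  # urlsplit() already lower-cases the scheme
    if not scheme:
        return "no protocol given"
    if scheme not in ASSUMED_SUPPORTED:
        # e.g. "htps" instead of "https"; libcurl would reject it with error code 1
        return f"unsupported or misspelled protocol: {scheme!r}"
    return None


print(protocol_problem("htps://example.com/"))  # unsupported or misspelled protocol: 'htps'
```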

# Copy URL Coda 2 update #

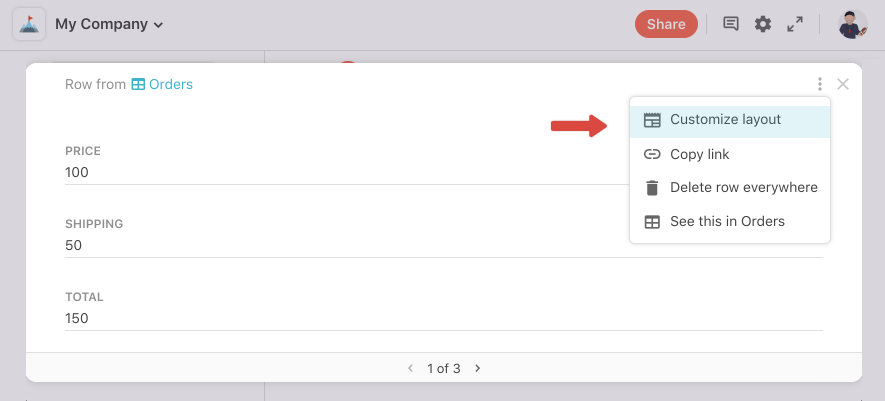

As you may know, Coda 2.5 is not available in the Mac App Store. (One of the major causes for Coda 2.5's delay was wrestling with sandboxing.) But don't worry: we've made Mac App Store migration painless. Download Coda 2.5 and launch it. It should detect your Mac App Store copy and pop up a migration dialog. You can then view the code and update your own as desired.

# Copy URL Coda 2 how to #

After installing the required libraries (BeautifulSoup, Requests, and LXML), let's learn how to extract URLs.
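The article's approach relies on BeautifulSoup and Requests; the sketch below swaps in Python's standard-library `html.parser` so it runs without extra installs. It works on an HTML string you have already downloaded (fetching is left out), and `LinkCollector`, `extract_urls`, and the sample URLs are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Only anchor tags carry the links we want.
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Turn relative links such as "book-1.html" into absolute URLs.
                self.links.append(urljoin(self.base_url, value))


def extract_urls(html: str, base_url: str) -> list[str]:
    """Return every link found in `html`, in document order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


page = '<p><a href="book-1.html">One</a> <a href="https://example.com/about">About</a></p>'
print(extract_urls(page, "https://books.example/catalogue/"))
```

With BeautifulSoup, the core of the same idea is roughly `[urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)]`. Resolving with `urljoin` matters because pages often use relative links, which are useless once taken out of the page they came from.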
